Cache memory plays a pivotal role in modern computing, bridging the gap between the CPU’s ultra-fast processing capabilities and the comparatively slower main memory. By storing frequently accessed data closer to the processor, cache memory significantly enhances performance, reduces latency, and optimizes a computer system’s overall efficiency. This article delves into the essentials of cache memory, its architecture, and its profound impact on CPU performance.

Understanding Cache Memory

Cache memory is a specialized form of high-speed storage located directly on or near the CPU. It is designed to provide quick access to frequently used data and instructions, minimizing the need for the processor to fetch data from the slower main memory.

What is Cache Memory?

Cache memory is a small, ultra-fast type of volatile memory that temporarily stores copies of data and instructions that the CPU is likely to reuse. Typically built from SRAM, it operates faster than the DRAM used for main memory, allowing it to keep pace with the CPU’s rapid processing demands. It acts as a buffer between the CPU and the main memory, ensuring the processor has immediate access to critical data.

Cache memory is divided into levels—L1, L2, and L3—each offering varying speeds and capacities. These levels work in unison to improve data retrieval efficiency, ensuring that the CPU can perform at its optimal speed without being bogged down by memory access delays.

Why Cache Memory Matters

The importance of cache memory lies in its ability to mitigate the bottleneck created by the disparity in speed between the CPU and RAM. Without cache memory, the CPU would spend a significant portion of its time waiting for data to be fetched from the main memory, leading to underutilization of its processing capabilities.

Cache memory reduces this latency by keeping recently and frequently accessed data and instructions close to the processor. As a result, it not only accelerates processing but also ensures smoother task execution, particularly in applications that require real-time performance, such as gaming, video editing, and data-intensive computations.

The Evolution of Cache Memory

The concept of cache memory has evolved significantly over the years. Early computers lacked this feature, leading to slower processing speeds. With advancements in microprocessor technology, the introduction of cache memory revolutionized computing, enabling processors to handle increasingly complex tasks efficiently.

Modern processors now incorporate multi-level cache architectures, offering a layered approach to data access. These advancements have allowed CPUs to keep up with the growing demands of software applications and data processing.

The Architecture of Cache Memory

The architecture of cache memory is intricately designed to balance speed, capacity, and cost. Understanding its structure and operational principles provides insight into how it enhances CPU performance.

Levels of Cache Memory

Cache memory is typically organized into three levels: L1, L2, and L3. Each level has distinct characteristics and serves specific purposes:

- L1 Cache: The smallest and fastest cache located directly on the CPU core. It is divided into two sections: one for instructions (instruction cache) and one for data (data cache). L1 cache provides immediate access to critical data but has limited capacity.

- L2 Cache: Larger and slightly slower than L1 cache, L2 cache serves as an intermediary storage level. Depending on the processor design, it can be shared among multiple CPU cores or dedicated to a single core.

- L3 Cache: The largest and slowest cache level, L3 is typically shared across all CPU cores. It acts as a repository for data that does not fit into L1 or L2, ensuring that the CPU can still access frequently used data without relying on main memory.

Cache Mapping Techniques

Cache memory uses specific mapping techniques to determine how data is stored and retrieved:

- Direct Mapping: Each main memory block is mapped to a specific cache line. While simple, this method can lead to conflicts when multiple memory blocks compete for the same cache line.

- Fully Associative Mapping: Allows a memory block to be stored in any cache line, which eliminates conflicts between blocks but requires more complex hardware, because every line’s tag must be compared on each lookup.

- Set-Associative Mapping: This method combines direct and associative mapping. The cache is divided into sets; each memory block maps to exactly one set but may occupy any line within that set. It balances complexity and efficiency.
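
The three mapping schemes can be illustrated with a small sketch. The code below is a toy model, not a description of any particular processor: the 64-byte line size, the set counts, and the names `split_address`, `SetAssociativeCache`, and `count_hits` are all assumptions chosen for illustration. It shows how an address splits into tag, set index, and offset, and how two blocks that collide in a direct-mapped cache can coexist in a two-way set-associative cache of the same total capacity.

```python
def split_address(addr, line_size=64, num_sets=64):
    """Split a byte address into (tag, set_index, offset).

    line_size and num_sets are illustrative values, not taken from real hardware.
    """
    offset = addr % line_size      # byte position inside the cache line
    block = addr // line_size      # which memory block the address belongs to
    set_index = block % num_sets   # the set this block is mapped to
    tag = block // num_sets        # identifies the block within its set
    return tag, set_index, offset


class SetAssociativeCache:
    """Toy cache with `ways` lines per set.

    ways=1 behaves as direct-mapped; num_sets=1 behaves as fully associative.
    Eviction inside a set is oldest-first purely to keep the sketch short.
    """

    def __init__(self, num_sets, ways, line_size=64):
        self.num_sets = num_sets
        self.ways = ways
        self.line_size = line_size
        self.sets = [[] for _ in range(num_sets)]  # each set stores up to `ways` tags

    def access(self, addr):
        """Return True on a hit, False on a miss (the line is then filled)."""
        tag, index, _ = split_address(addr, self.line_size, self.num_sets)
        lines = self.sets[index]
        if tag in lines:
            return True
        if len(lines) == self.ways:
            lines.pop(0)  # set is full: evict its oldest line
        lines.append(tag)
        return False


def count_hits(cache, addresses):
    """Run an address trace through the cache and count the hits."""
    return sum(cache.access(a) for a in addresses)


# Two addresses exactly 4 KiB apart alternate four times each.
conflicting = [0x0000, 0x1000] * 4
direct = SetAssociativeCache(num_sets=64, ways=1)   # 64 sets x 1 way x 64 B = 4 KiB
two_way = SetAssociativeCache(num_sets=32, ways=2)  # 32 sets x 2 ways x 64 B = 4 KiB

print(count_hits(direct, conflicting))   # 0: the two blocks keep evicting each other
print(count_hits(two_way, conflicting))  # 6: both blocks fit in the same set
```

In this sketch the direct-mapped cache never hits because both addresses map to set 0 and only one line is available there, which is the conflict described above. Giving each set a second way removes the conflict without increasing capacity.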

Replacement Policies in Cache Memory

When a new block must be brought in and no suitable cache line is free, a replacement policy determines which data is evicted to make room:

- Least Recently Used (LRU): Evicts the least recently accessed data.

- First In, First Out (FIFO): Removes the oldest data in the cache.

- Random Replacement: Selects a random cache line for eviction.

These policies aim to maximize cache utilization and minimize the impact of cache misses.
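
To make the difference between these policies concrete, the following sketch counts misses for each one on a short access trace. It is a simplified software model of a fully associative cache, not how hardware implements replacement; the function names, the three-line capacity, and the trace are assumptions made for this example.

```python
import random
from collections import OrderedDict, deque


def lru_misses(capacity, accesses):
    """Count misses when the least recently used block is evicted."""
    cache = OrderedDict()  # insertion order doubles as recency order
    misses = 0
    for block in accesses:
        if block in cache:
            cache.move_to_end(block)       # hit: mark as most recently used
        else:
            misses += 1
            if len(cache) == capacity:
                cache.popitem(last=False)  # evict the least recently used block
            cache[block] = None
    return misses


def fifo_misses(capacity, accesses):
    """Count misses when the block that entered the cache first is evicted."""
    queue = deque()
    resident = set()
    misses = 0
    for block in accesses:
        if block not in resident:
            misses += 1
            if len(queue) == capacity:
                resident.discard(queue.popleft())  # evict the oldest arrival
            queue.append(block)
            resident.add(block)
    return misses


def random_misses(capacity, accesses, seed=0):
    """Count misses when a randomly chosen line is evicted."""
    rng = random.Random(seed)  # seeded so repeated runs give the same result
    lines = []
    misses = 0
    for block in accesses:
        if block not in lines:
            misses += 1
            if len(lines) == capacity:
                lines[rng.randrange(capacity)] = block  # overwrite a random line
            else:
                lines.append(block)
    return misses


trace = ["A", "B", "C", "A", "D", "A", "B"]
print(lru_misses(3, trace))     # 5
print(fifo_misses(3, trace))    # 6
print(random_misses(3, trace))  # depends on the seed
```

On this trace LRU keeps block A resident because it was reused just before D arrived, while FIFO evicts A simply because it was loaded first. Other traces can favor a different policy, so these numbers are illustrative rather than a general ranking.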

The Impact of Cache Memory on CPU Performance

Cache memory significantly influences CPU performance, enabling faster data access and improved multitasking capabilities. Its design and operation are critical to achieving optimal system performance.

Reducing Latency with Cache Memory

Latency, the time delay in data transfer, is a major performance bottleneck in computing systems. Cache memory addresses this issue by storing frequently accessed data close to the CPU, drastically reducing the time required for data retrieval. This is particularly important in applications that demand real-time performance, such as gaming, video editing, and scientific simulations.

By minimizing latency, cache memory ensures the CPU can process tasks seamlessly, improving the overall user experience and system responsiveness.
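
The latency benefit can be estimated with a back-of-the-envelope calculation of the average time per memory access. The sketch below assumes that each level is checked in turn and that every access reaching a level pays that level's latency. The cycle counts and hit rates are made-up illustrative values, not measurements of any real processor, and `average_access_time` is a hypothetical helper name.

```python
def average_access_time(levels, memory_latency):
    """Expected latency per access for a cache hierarchy.

    levels: list of (hit_latency, hit_rate) pairs ordered from L1 outward,
            where hit_rate is the fraction of accesses reaching that level
            which hit there.
    memory_latency: cost of going all the way to main memory.
    """
    expected = 0.0
    reach_probability = 1.0  # fraction of accesses that get as far as this level
    for hit_latency, hit_rate in levels:
        expected += reach_probability * hit_latency
        reach_probability *= 1.0 - hit_rate  # only misses continue outward
    expected += reach_probability * memory_latency
    return expected


# Illustrative numbers only (latencies in CPU cycles).
hierarchy = [(4, 0.90), (12, 0.80), (40, 0.75)]  # L1, L2, L3
print(f"{average_access_time(hierarchy, 200):.1f}")  # 7.0
print(f"{average_access_time([], 200):.1f}")         # 200.0 with no cache at all
```

Under these assumed numbers the average access costs about 7 cycles instead of 200, even though only 0.5% of accesses end up going to main memory. The result is sensitive to the hit rates, which is why workloads with poor locality see far less benefit.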

Enhancing Multitasking and Application Performance

Modern computing often involves running multiple applications simultaneously. Cache memory plays a crucial role in multitasking by ensuring the CPU can access necessary data without delay. This prevents performance degradation and allows users to switch between tasks effortlessly.

Applications that rely on repetitive data access, such as web browsers, database management systems, and software compilers, benefit significantly from cache memory. These applications experience faster load times and smoother execution thanks to the CPU’s ability to retrieve data from the cache.

Balancing Cost and Efficiency

While cache memory offers unparalleled speed advantages, it is also more expensive to manufacture than other types of memory. This cost consideration limits the size of cache memory in processors, requiring manufacturers to strike a balance between performance and affordability.

Modern processors address this using multi-level cache architectures, optimizing cost and efficiency. This approach ensures that critical data is stored in the fastest memory tiers, while less frequently used data is stored in slower but more cost-effective storage solutions.

Challenges and Future of Cache Memory

As computing demands evolve, cache memory faces new challenges and opportunities. Technology innovations aim to overcome these hurdles and further enhance CPU performance.

Addressing Cache Coherence Issues

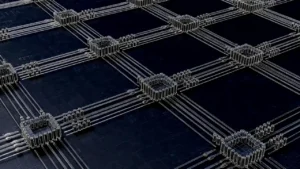

Maintaining cache coherence, that is, ensuring that all cores have a consistent view of shared data, is a significant challenge in multi-core processors. Coherence protocols such as MESI (Modified, Exclusive, Shared, Invalid) track the state of each cache line so that a write by one core invalidates stale copies held by others, and refinements of these protocols continue to be developed to keep data sharing among cores efficient.
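
The four MESI states can be sketched as a small state machine. The model below is heavily simplified and makes several assumptions: it tracks a single cache line, treats reads and writes as instantaneous, and omits the bus messages and write-backs a real implementation needs. The class name `CoherentLine` and its methods are hypothetical.

```python
from enum import Enum


class State(Enum):
    MODIFIED = "M"   # only copy, and it differs from main memory
    EXCLUSIVE = "E"  # only copy, identical to main memory
    SHARED = "S"     # one of possibly several identical copies
    INVALID = "I"    # no usable copy in this cache


class CoherentLine:
    """Tracks the MESI state of ONE cache line in each core's private cache."""

    def __init__(self, num_cores):
        self.states = [State.INVALID] * num_cores

    def read(self, core):
        if self.states[core] is not State.INVALID:
            return  # M, E, or S: read hit, no state change
        others_hold = False
        for other, state in enumerate(self.states):
            if other != core and state is not State.INVALID:
                # A Modified copy would be written back here; M and E drop to S.
                self.states[other] = State.SHARED
                others_hold = True
        self.states[core] = State.SHARED if others_hold else State.EXCLUSIVE

    def write(self, core):
        for other in range(len(self.states)):
            if other != core:
                self.states[other] = State.INVALID  # invalidate every other copy
        self.states[core] = State.MODIFIED

    def snapshot(self):
        """Return the per-core states as a compact string, e.g. 'EI'."""
        return "".join(state.value for state in self.states)


line = CoherentLine(num_cores=2)
line.read(0)
print(line.snapshot())  # EI: core 0 holds the only copy
line.read(1)
print(line.snapshot())  # SS: both cores share the line
line.write(1)
print(line.snapshot())  # IM: core 0's copy is invalidated
line.read(0)
print(line.snapshot())  # SS: core 1's modified data is shared again
```

The key property the sketch preserves is that at most one core can hold the line in the Modified or Exclusive state, and a write always leaves every other copy Invalid.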

Overcoming Scalability Limitations

As processors incorporate more cores and larger caches, scalability becomes a concern. Designers are exploring new architectures, such as non-uniform cache access (NUCA), to address these limitations and improve cache performance in high-core-count systems.

Innovations in Cache Memory Design

Emerging technologies, such as 3D stacking and phase-change memory, promise to revolutionize cache memory design. These innovations aim to increase cache capacity, reduce latency, and improve energy efficiency, paving the way for faster and more powerful processors.

Conclusion

Cache memory is a cornerstone of modern computing. It enables CPUs to deliver exceptional performance by minimizing latency and optimizing data access. Its multi-level architecture, mapping techniques, and replacement policies ensure critical data is always within the processor’s reach. As technology advances, cache memory will remain vital in meeting the ever-growing demands of computing systems. Understanding its role and operation is essential for anyone looking to maximize the efficiency and performance of their computing devices.